The Disease Models Were Tested and Failed, Massively

One year ago this week, the world embraced a lockdown strategy premised on the epidemiology modeling of Imperial College London (ICL). In a March 16, 2020 report by physicist and computer modeler Neil Ferguson, the ICL team predicted catastrophic death tolls in the United Kingdom and United States unless both countries adopted an aggressive policy response of mandating social distancing, school and business closures, and ultimately sheltering in place.

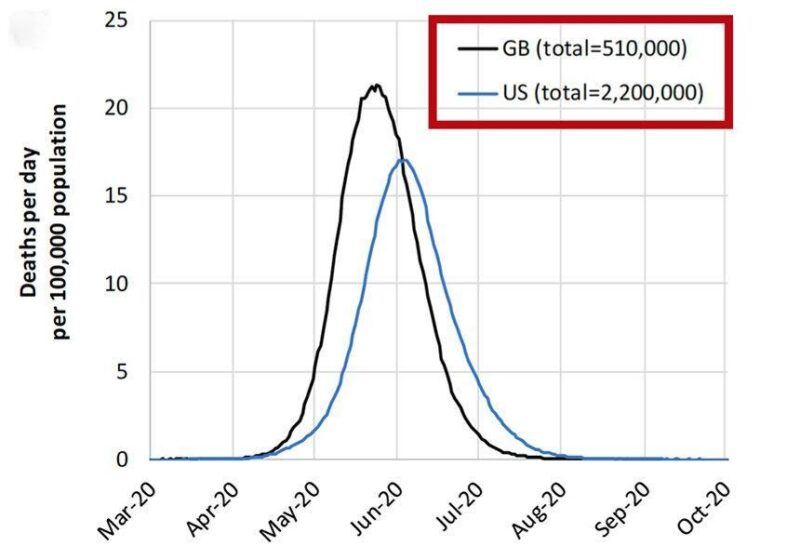

Ferguson’s model presented a range of scenarios under increasingly restrictive nonpharmaceutical interventions (NPIs). Under its “worst case” or “do nothing” model, 2.2 million Americans would die, as would 510,000 people in Great Britain, with the peak daily death rate hitting somewhere around late May or June. At the same time, the ICL team promised salvation from the coronavirus if only governments would listen to and adopt its technocratic recommendations. Time was of the essence, so President Donald Trump and UK Prime Minister Boris Johnson both listened. And so began the first year of “two weeks to flatten the curve.”

It took a little over a month before we saw conclusive evidence that something was greatly amiss with the ICL model’s underlying assumptions. A team of researchers from Uppsala University in Sweden adapted Ferguson’s work to their country and ran the projections, getting similarly catastrophic results. Over 90,000 people would die by summer from Covid-19 if Sweden did not enter immediate lockdown. Sweden never locked down though. By May it was clear that the Uppsala adaptation of ICL’s model was off by an order of magnitude. A year later, Sweden has fared no worse than the average European lockdown country, and significantly better than the UK, which acted on Ferguson’s advice.

Pressed on this unexpected result, ICL tried to distance itself from the Swedish adaptation of its model in May. The records from the March 21st supercomputer run of the Uppsala team’s projections belie that assertion, linking directly to Ferguson’s March 16th report as the framework for its modeling design. But no matter – the ICL team’s own publications would soon succumb to a real-time testing against actual data.

A second ICL report, attempting to model the reopening of the United States from lockdowns, wildly exaggerated the death tolls that were expected to follow. By July, this model too had failed to even minimally correspond to observed reality. ICL attempted to save face by publishing an absurd exercise in circular reasoning in the journal Nature, where they invoked the unrealized projections of their own model to supposedly “prove” multiple millions of lives had been saved by the lockdowns. That study soon failed basic robustness checks when the ICL team’s suite of models was applied to different geographies.

Another team of Swedish researchers then noticed oddities in the ICL team’s coding, suggesting they had modified a key line to bring data from their own comparative analysis of Sweden into sync with other European countries under lockdown after the models did not align. A published derivative of this discovery showed that ICL’s own attempts to validate the effectiveness of its lockdown strategies do not withstand empirical scrutiny.

Finally, in November, another team of researchers from the United States compared a related ICL team model for a broader swath of countries against five other international models of the pandemic, examining the performance of each against observed deaths. Their results contain a stunning indictment: “The Imperial model had larger errors, about 5-fold higher than other models by six weeks. This appears to be largely driven by the aforementioned tendency to overestimate mortality.”
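The kind of comparison described above, scoring each model's projections against observed deaths, can be sketched in a few lines. This is a minimal illustration only: the metric (median absolute percent error) is an assumption about how such a comparison might be scored, the model names are hypothetical, and every number below is an invented placeholder rather than data from the study.

```python
from statistics import median


def absolute_percent_error(predicted: float, observed: float) -> float:
    """Absolute forecast error as a percentage of the observed value."""
    if observed <= 0:
        raise ValueError("observed must be positive")
    return abs(predicted - observed) * 100.0 / observed


def median_ape(pairs):
    """Median absolute percent error over (predicted, observed) pairs."""
    return median(absolute_percent_error(p, o) for p, o in pairs)


# HYPOTHETICAL placeholder values, invented for illustration only.
# Each pair is (projected deaths, observed deaths) for one location
# at a fixed forecast horizon.
forecasts = {
    "model_a": [(1200, 1000), (450, 500), (2100, 2000)],
    "model_b": [(2000, 1000), (900, 500), (3000, 2000)],
}

scores = {name: median_ape(pairs) for name, pairs in forecasts.items()}

# How many times larger model_b's typical error is than model_a's.
fold = scores["model_b"] / scores["model_a"]

for name, score in scores.items():
    print(f"{name}: median absolute percent error = {score:.1f}%")
print(f"model_b error is {fold:.1f}x that of model_a")
```

A fold-difference in error of this kind says nothing about direction on its own. To show a tendency to overestimate, one would also look at the signed error (projected minus observed).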

The verdict is in. Imperial College’s Covid-19 modeling has an abysmal track record – a characteristic it unfortunately shares with Ferguson’s prior attempts to model mad cow disease, swine flu, avian flu, and countless other pathogens.

After a year of model-driven lockdowns, we may also look back to the original March 16, 2020 report to see yet another failure of its predictive ability. Recall that this is the model that fueled the alarmist rush to shut everything down last March, all to avert a 2.2 million death toll that would presumably peak around June.

As noted above, the 2.2 million figure for the US and the corresponding 510,000 figure for Britain were “worst case” scenarios in which the pandemic ran its course. According to the underlying theory of the ICL model, these catastrophic totals could be reduced by the adoption of NPIs – the escalating suite of social distancing measures, business and school closures, and ultimately full lockdowns that we observed in practice over the last year.

Aside from its 2.2 million worst case scenario, ICL offered no specific projections for how its proposed mitigation measures would work in the United States. Ferguson did however tell the New York Times on March 20, 2020 that a “best case” American scenario would still yield “about 1.1 million deaths,” giving us a glimpse of what he believed to be possible under NPI mitigation. The March 16th report similarly “predict[ed] there would still be in the order of…1.1-1.2 million in the US” under the most optimistic mitigation strategy, barring a large increase in hospital ICU bed capacity.

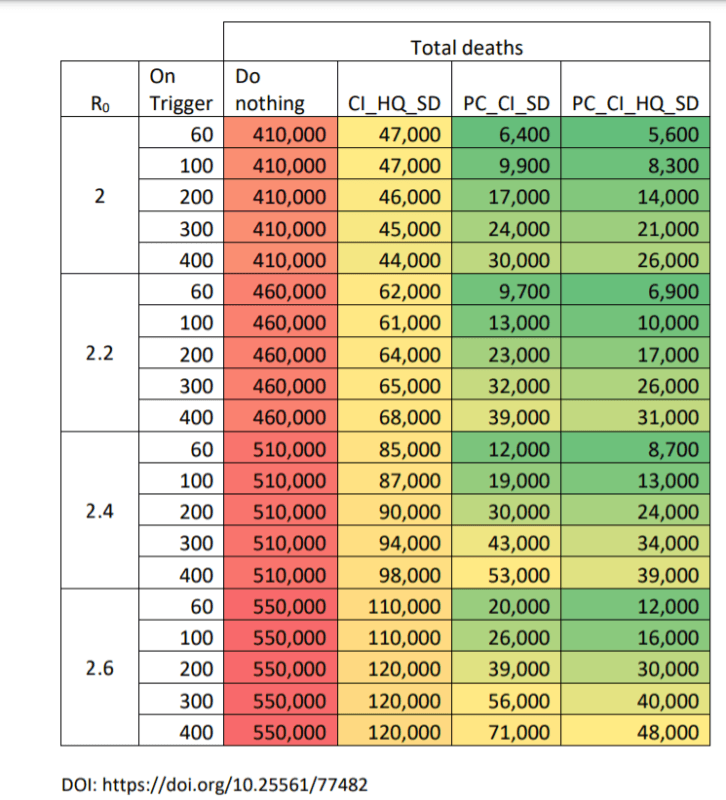

By contrast, ICL did publish an extensive table showing the results of its model run for Britain over a group of four increasingly stringent NPI scenarios. These range from the “worst case” projection with half a million deaths (the figures vary depending on assumptions about the virus’s reproduction rate) to a more stringent model where four NPIs (public school closures, case isolation, home quarantine, and social distancing) are simultaneously enacted. The results are depicted below.

Note that the UK enacted policies based on all four measures recommended by the March 16th report, as well as an even more stringently enforced general lockdown on three separate occasions. After one year of following and expanding upon the Imperial College strategy, an unusual result appears in the data: not only have the UK’s numbers come up far short of Ferguson’s most alarmist scenario (depicted in the first column), but the UK has actually done much worse than the other NPI mitigation models in the ICL report.

As of the 1-year anniversary, the UK had a little over 125,000 confirmed Covid-19 deaths. By implication, the UK death toll has exceeded the mildest of the other three NPI scenarios from the ICL model (column 2) and blown past its heavier NPI recommendations (columns 3 and 4), even while operating under a more stringent set of lockdowns than ICL originally contemplated.

The implications are clear. While Ferguson wildly exaggerated the “worst case” scenario for the UK, he also severely overestimated the effectiveness of NPIs at controlling the pandemic.

By building its policy response around the Imperial College model, the UK government delivered the worst of both worlds. It imposed some of the most severe and long-lasting lockdowns in the world based on the premise that NPIs would work as Ferguson’s team predicted, and that such actions were needed to avert a catastrophe. Except the lockdowns did not work as intended, and the UK also ended up with an abnormally high death count compared to other countries – including locales that did not lock down, or that reopened earlier and for longer periods than the UK.

Why were the Ferguson/ICL predictions so far off base on both ends? The answer likely derives from two central flaws in their model design.

First, Ferguson adapted the model directly from a 2006 influenza pandemic model that he published in the journal Nature. As with the March 16th Covid report, this study aimed to predict the spread of a virus across the general population, subject to a suite of increasingly stringent NPI countermeasures. As the second-to-last paragraph of the study reveals though, it only modeled general population spread. In doing so, the authors acknowledged that “Lack of data prevent us from reliably modelling transmission in the important contexts of residential institutions (for example, care homes, prisons) and health care settings.”

With Covid-19, however, nursing homes have emerged as one of the greatest vulnerabilities in the pandemic. In many locales, nursing home deaths alone account for almost half of all Covid-19 fatalities despite housing only a tiny fraction of the population. While the latest nursing home figures for the UK are as yet hard to come by, reports from last year suggest they are not only a large share of the country’s Covid-19 deaths but also severely undercounted in official records. Using a preliminary count from last year, the UK had one of the worst nursing home shielding ratios in Europe – a measure that compares a country’s death toll in its care facilities to that of the general population. The ICL projections likely missed this problem entirely due to a defect in the 2006 model they were built upon.

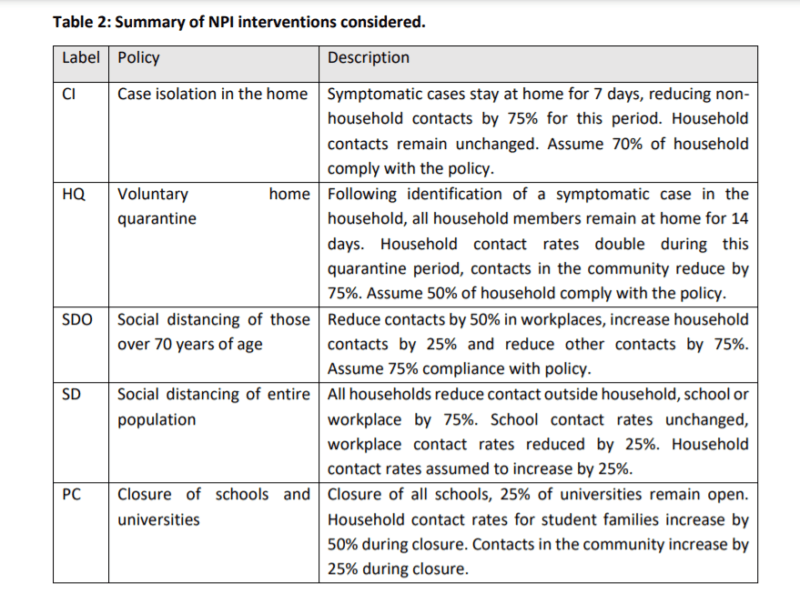

Second, Ferguson’s model severely overstated the effectiveness of NPIs at mitigating general population spread. Part of the appeal of the ICL report from last March came from its succinct portrayal of the available policy options and their claimed effects. The modelers presented political leaders with a menu of escalating measures to adopt with mathematical precision, each linked to an associated projection of its effectiveness at staving off the pandemic. All the politicians had to do was select from the menu and implement the prescribed course.

Except it wasn’t that simple in practice. ICL’s recommended NPI measures baked assumptions about their own effectiveness into the model. In reality, most of these assumptions had never been tested or even minimally quantified. As a key chart from the March 16th report illustrates, the supposed effect of each NPI was little more than a guesstimate – a set of nice, round numbers that purported to show the change in social interactions after its adoption.
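To see how an assumed effectiveness figure can drive a model's headline output, consider the toy sketch below. It is a textbook compartmental (SIR) model, not the individual-based simulation ICL used, and every parameter value (`r0`, `infectious_days`, `ifr`, the population size, and the `contact_reduction` levels) is a hypothetical placeholder chosen for illustration. The point is only that the projected toll is a direct function of whatever contact reduction the modeler assumes.

```python
def toy_sir_deaths(r0, contact_reduction, population=1_000_000,
                   infectious_days=5.0, ifr=0.01, days=730, seed_cases=10):
    """Toy discrete-time SIR model with one-day steps.

    `contact_reduction` is the ASSUMED fraction by which an intervention
    cuts transmission (0.0 = no effect, 1.0 = transmission stops).
    Returns projected cumulative deaths after `days`.
    """
    gamma = 1.0 / infectious_days                  # daily recovery rate
    beta = r0 * gamma * (1.0 - contact_reduction)  # daily transmission rate
    susceptible = float(population - seed_cases)
    infectious = float(seed_cases)
    for _ in range(days):
        new_infections = min(susceptible,
                             beta * susceptible * infectious / population)
        new_recoveries = gamma * infectious
        susceptible -= new_infections
        infectious += new_infections - new_recoveries
    ever_infected = population - susceptible
    return ifr * ever_infected


# HYPOTHETICAL inputs: the same epidemic under four assumed levels
# of contact reduction. None of these values come from the ICL report.
for assumed in (0.0, 0.25, 0.5, 0.75):
    deaths = toy_sir_deaths(r0=2.4, contact_reduction=assumed)
    print(f"assumed contact reduction {assumed:.0%}: "
          f"{deaths:,.0f} projected deaths")
```

Changing the assumed reduction changes the projection, and nothing inside the model checks whether that assumption holds in practice.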

A 2019 report by the World Health Organization (WHO) warned of the flimsy empirical basis for epidemiology models such as the one developed by ICL. “Simulation models provide a weak level of evidence,” the report noted, and such models lacked randomized controlled trials to test their assumptions. The same report designated mass quarantine measures – what we now know as lockdowns – as “Not Recommended” due to lack of evidence for their effectiveness. Summarizing this literature, which included the same 2006 influenza model that Ferguson adapted to Covid-19, the WHO concluded: “Most of the currently available evidence on the effectiveness of quarantine on influenza control was drawn from simulation studies, which have a low strength of evidence.”

The UK’s experience under the ICL model therefore demonstrates not only Ferguson’s propensity toward wildly alarmist disease forecasting – it also illustrates the abject failure of lockdowns and related NPI measures to mitigate the pandemic. As a revealing point of comparison, the UK’s population-adjusted daily death toll under lockdowns has been consistently higher than no-lockdown Sweden for most of the pandemic, despite both countries following a nearly identical pattern of timing in both the first and second waves.

The relevant question, then, is not whether the UK failed to lock down stringently enough, but whether lockdowns offer any meaningful benefit whatsoever in mitigating the pandemic. A growing body of empirical data strongly suggests they do not.

The repeated failures of the Ferguson/ICL model point to a scientific error at the heart of the theory behind lockdowns and similar NPIs. Its proponents assume, without evidence, that their prescriptive approach is correct, and that it may be implemented by sheer will, much as one might click a check-box in a Sim City-style video game. After a year of real-time testing, it is now abundantly clear that this video game approach to pandemic management ranks among the most catastrophic public health policy failures of the last century.